AI and mental health

For the second half of the year I went silent. Claude Code went mainstream and got so good that it now just works in many cases, without any special tips and tricks. Initially, I felt good about being right about the tool, but quite soon I got… depressed.

My monkey brain extrapolated where things were heading, and I got stuck in an infinite loop of doom and end-of-the-world thinking.

The narrative “AI won’t replace developers, developers with AI will replace developers without it” started to look like the denial phase:

LinkedIn got a burst of posts about people vibecoding full apps in just a few evenings.

Articles about ChatGPT solving math problems started popping up one after another, days apart.

Linus Torvalds posted on GitHub that he vibecoded an audio visualizer.

DeepMind’s Chief AGI Scientist tweeted about a 50% chance of AGI arriving by 2028, in a thread about hiring a Senior Economist for post-AGI economics research.

From every corner you hear that software engineering is DONE, IT’S OVER, come work at McDonald’s!

And just like that, you find yourself on the verge of a panic attack.

Well, as an undisputed world boxing champion once said: “Don’t push the horses!”

The first thing I did to calm down was to focus on what I can control.

If AGI arrives in 6 months, 1 year or 2 years, there is literally nothing I can do to prevent it. Investment in AI in 2025 exceeded $200 billion; the only comparable wave of investment was the Dot-Com bubble, which left us the amazing cheap internet we have today. So if reaching AGI with the LLM architecture is possible at all, humanity will surely get there, and I can’t do anything about it.

Can I prepare for it? Not really. The richest companies are hiring the best minds in the world to analyze and prepare for the possible outcomes, so the odds are not in my favor. There’s no point spending time and energy here.

What if AGI doesn’t happen in the next 5 years?

In that case, we have to deal with the fact that we got a new tool that writes code quite well from human-written text. Like any tool, it will get cheaper and better over time.

How does it impact the industry?

Any automation tends to decrease the number of jobs in an industry. But because LLMs tend to hallucinate and sometimes give very plausible but completely wrong answers, you need a domain expert to validate whatever they produce. If you dig deeper into the articles about ChatGPT solving math problems, you will discover that in many cases the solutions already existed but had not been properly published, and the ones that were genuinely solved didn’t use novel approaches at all. Without human experts, we would be handing AI a Nobel Prize every day for slop.

Ok, so we need an expert. Who are the primary experts in software development? The PM and the tech lead. A PM can now do far more: things that required a tech lead in the past can now be done with an LLM. It might not be obvious, but the same applies to the tech lead: they can now do much of the PM’s job far more easily, and gathering information about the domain and its edge cases has become trivial. So it’s highly likely we will see these two roles merge into one. There is a particularly strong case for devs acting as PMs on technical products, like a new database: a non-technical user is highly unlikely to have enough expertise to understand the problem, let alone demand a new solution for it in the first place. Such products are usually built with systems languages like C++/Go/Rust, so before switching careers entirely and becoming an electrician, it’s worth considering a switch to these languages first.

What about other roles?

Using LLMs as code-writing machines has already turned a good chunk of devs into de facto QA specialists. Many companies have an engineering policy of “you wrote it, you own it”, which puts infrastructure and maintenance on devs as well. Add the ultimate teacher that can explain anything to the picture, and it becomes quite obvious that the role will shift towards something more generic, like an Automator/Integrator, who is expected to cover the entire solution end-to-end.

Will we need fewer automators than software devs we currently have?

Instinctively, you want to say yes. However, there is a possibility that:

Expectations of software will increase. Anyone can now produce a low-quality MVP in a matter of hours, and there is already a surge of new apps in the Apple App Store. Success stories about making good money from them are rare, because even if an app has a good idea, it’s easy to copy. So everyone is, and will keep, looking for an edge.

Currently, I see people betting on distribution (marketing), which is a valid point; however, I feel that reliability will be among users’ top requests once fatigue from buggy software sets in. The happy path was always the easy part of software engineering. The last 10% of any project was always the most challenging and required the most effort. I bet not everyone enjoys weighing trade-offs and thinking about edge cases on a daily basis.

The number of lazy people will simply increase as more and more people delegate their thinking to AI. The truth is that all information has been available to everyone for quite some time thanks to the internet, yet we still have travel agencies and consultants. Even if research becomes trivial, you still need to use your brain to ask the right questions, and that’s where expertise comes in handy. That’s what people will pay for: “think instead of me”.

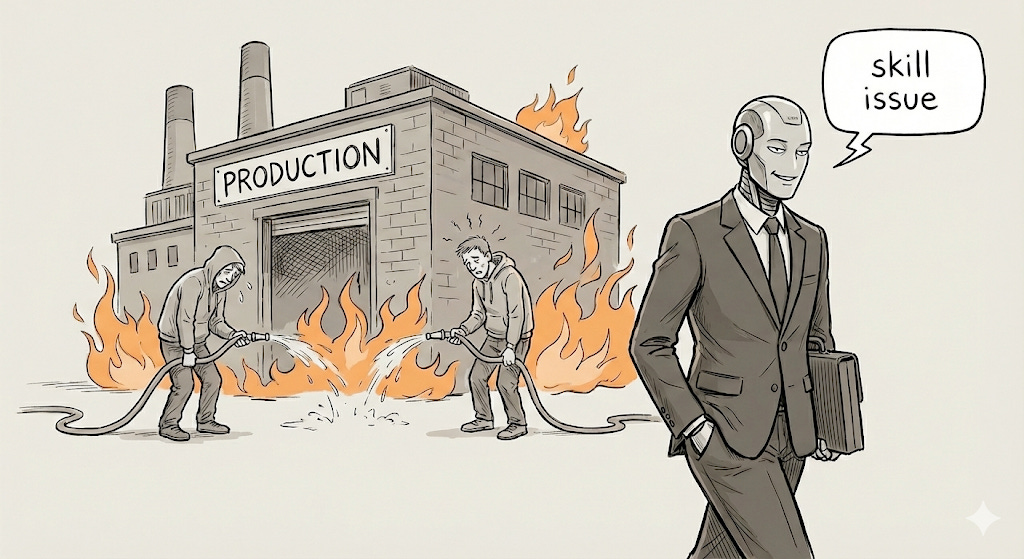

Several huge security incidents will happen due to flaws in LLMs and AI agents (e.g., prompt injection). LLM providers won’t take any liability and will say it was a “skill issue” on the part of whoever was using their product.

To some extent, it’s already happening. For instance, after the rollout of Claude Cowork, Boris Cherny was flexing that all of it had been written by Claude Code itself. But Cowork had many issues, and users on Reddit joked that “you can tell it was built by AI”. On top of that, it had some serious security issues, and Anthropic said it was the responsibility of the LLM users.

Obviously, business owners don’t want to deal with this and would hire someone to be responsible for it.

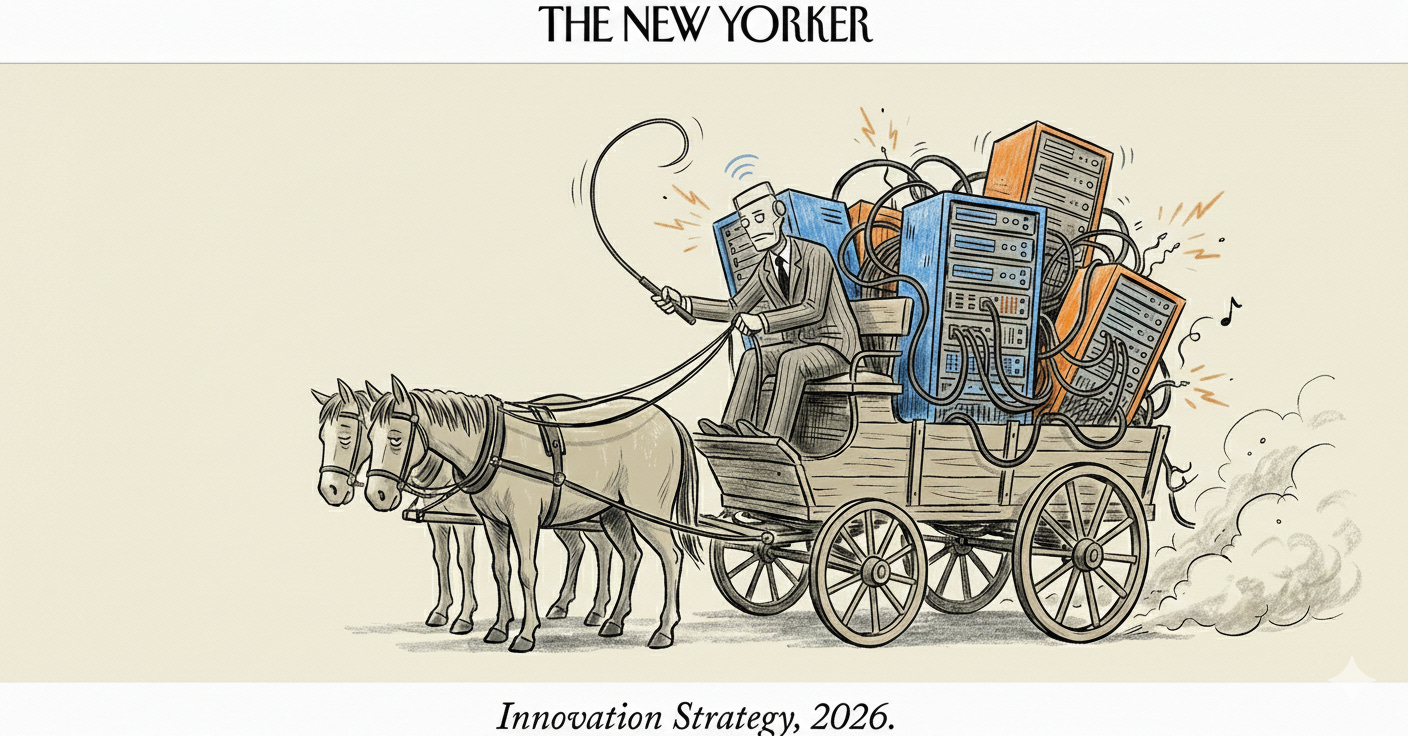

Every company will build all its software tooling in-house, since there is no point in paying for SaaS anymore, right? They will learn about maintenance costs quite quickly, so they might just hire an automator to manage all of it. Turns out “we’ll just build it ourselves” hits different at 4am when prod is down and you’re being sued by thousands of angry customers.

In my opinion, these reasons won’t be enough, and there will be fewer automators than there are software developers today, unless software products become immensely more complex. We just won’t see a sharp drop in numbers.

So the best thing to do is to become such an automator now. For that, you need to forget about the split into roles (backend/frontend/devops/ml/security/mobile) and just do whatever the business needs. If you don’t know something, you have the best teacher in the world, as long as you ask the right questions. Asking the right questions is what most senior devs do anyway, so you should be good at it already. The hardest adjustment for me was accepting that it’s now fine to solve problems in a sub-optimal way, as long as you don’t spend your own time on it and throw more compute at the problem instead. There is even a technique for this called the Ralph Loop. Here is a great post about the approach from Geoffrey Huntley, the creator of this masterpiece. If you don’t have time to read it, here is the secret formula:

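```shell
# Ralph loop: pipe the same prompt into the agent, forever.
# The Claude Code CLI installs as `claude`; -p (print mode) runs non-interactively and exits.
while :; do cat PROMPT.md | claude -p; done
```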

Congratulations, now you are a senior Ralph dev!

Ok, that’s great, but you’re still anxious that AI will take your job next month?

Look at LLM providers’ actions, not their words

OpenAI is rolling out ads. In 2024 Sam Altman said that ads would be a last resort for them. If AGI is around the corner, why bother?

Every 6 months, Anthropic’s CEO says that we won’t need developers in 6 months. Meanwhile, the company keeps hiring devs.

Why bother if you are going to fire them in 6 months?

Because of these contradictions, I prefer to trust Yann LeCun (one of the three “Godfathers of AI/Deep Learning”) on this matter:

TLDR

Don’t stress over things you cannot control. If AGI arrives, it’s a completely new world, and you cannot prepare for a post-AGI world because no one knows what’s coming.

Develop yourself into an Automator/Integrator, and don’t get attached to your current job title. Learn Rust and apply the Ralph loop to every problem you see around you.

Look at LLM providers’ actions, not their words.

Breathe out and remember that asking the right questions and thinking are not what everyone loves to do, so there will always be demand for them until the AGI era arrives.